At GDC, Microsoft announced a new feature for DirectX 12: DirectX Raytracing (DXR). The new API offers hardware-accelerated raytracing to DirectX applications, ushering in a new era of games with more realistic lighting, shadows, and materials. One day, this technology could enable the kinds of photorealistic imagery that we've become accustomed to in Hollywood blockbusters.

Whatever GPU you have, whether it be Nvidia's monstrous $3,000 Titan V or the little integrated thing in your $35 Raspberry Pi, the basic principles are the same. Indeed, while many aspects of GPUs have changed since 3D accelerators first emerged in the 1990s, they've all been built around a common technique: rasterization.

Here’s how things are done today

A 3D scene is made up of several elements: there are the 3D models, built from triangles with textures applied to each triangle; there are lights, illuminating the objects; and there's a viewport or camera, looking at the scene from a particular position. Essentially, in rasterization, the camera represents a raster pixel grid (hence, rasterization). For each triangle in the scene, the rasterization engine determines if the triangle overlaps each pixel. If it does, that triangle's color is applied to the pixel. The rasterization engine works from the furthermost triangles and moves closer to the camera, so if one triangle obscures another, the pixel will be colored first by the back triangle, then by the one in front of it.

This back-to-front, overwriting-based process is known as the painter's algorithm; bring to mind the fabulous Bob Ross, first laying down the sky far in the distance, then overwriting it with mountains, then the happy little trees, then perhaps a small building or a broken-down fence, and finally the foliage and plants closest to us.
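To make the back-to-front idea concrete, here is a minimal sketch of a painter's-algorithm rasterizer. It is a toy, not how any real GPU is built: the function names, the character "colors," and the tiny 8×8 grid are all assumptions made for illustration.

```python
# Toy painter's-algorithm rasterizer. All names here are hypothetical.

def edge(ax, ay, bx, by, px, py):
    """Signed area test: which side of the edge A->B the point P lies on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)

def covers(tri, px, py):
    """True if the point (px, py) lies inside the 2D triangle."""
    (ax, ay), (bx, by), (cx, cy) = tri
    w0 = edge(ax, ay, bx, by, px, py)
    w1 = edge(bx, by, cx, cy, px, py)
    w2 = edge(cx, cy, ax, ay, px, py)
    # Accept either winding order.
    return (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0)

def rasterize(triangles, width, height, background="."):
    """triangles: list of (depth, color, ((x, y), (x, y), (x, y)))."""
    grid = [[background] * width for _ in range(height)]
    # Furthest first, so nearer triangles overwrite farther ones.
    for depth, color, tri in sorted(triangles, key=lambda t: t[0], reverse=True):
        for y in range(height):
            for x in range(width):
                if covers(tri, x + 0.5, y + 0.5):  # test the pixel centre
                    grid[y][x] = color
    return grid

scene = [
    (10.0, "B", ((0, 0), (8, 0), (0, 8))),  # far triangle
    (2.0, "F", ((0, 0), (4, 0), (0, 4))),   # near triangle, overlapping the far one
]
image = rasterize(scene, 8, 8)
for row in image:
    print("".join(row))
```

Where the two triangles overlap, the pixel is painted "B" first and then overwritten with "F", exactly like the sky disappearing behind the mountains.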

Much of the development of the GPU has focused on optimizing this process by cutting down the amount that has to be drawn. For example, objects that are outside the field of view of the viewport can be ignored; their triangles can never be visible through the raster grid. The parts of objects that lie behind other objects can also be ignored; their contribution to a given pixel will be overwritten by a triangle that's closer to the camera, so there's no point even calculating what their contribution would be.
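The first of those optimizations, skipping anything outside the field of view, can be sketched roughly as follows. This is an illustration under stated assumptions rather than any driver's actual code: the camera sits at the origin looking down the +z axis, the view volume is a symmetric frustum, each object is approximated by a bounding sphere, and the `visible` function and its default parameters are made up for the example.

```python
import math

def visible(center, radius, half_fov_deg=45.0, near=0.1, far=100.0):
    """Conservative test: could a bounding sphere poke into the view frustum?"""
    x, y, z = center
    h = math.radians(half_fov_deg)
    c, s = math.cos(h), math.sin(h)
    # Signed distance from the sphere's centre to each frustum plane;
    # positive means the centre is on the inside of that plane.
    distances = [
        z - near,        # near plane
        far - z,         # far plane
        -c * x + s * z,  # right plane
        c * x + s * z,   # left plane
        -c * y + s * z,  # top plane
        c * y + s * z,   # bottom plane
    ]
    # The sphere is skipped only if it lies entirely outside some plane.
    return all(d >= -radius for d in distances)

print(visible((0.0, 0.0, 5.0), 1.0))    # straight ahead of the camera
print(visible((10.0, 0.0, 1.0), 1.0))   # far off to the right
print(visible((0.0, 0.0, -5.0), 1.0))   # behind the camera
```

Anything for which `visible` returns `False` never reaches the rasterizer at all, which is where much of the speed comes from.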

GPUs have become more complicated over the last two decades, with vertex shaders processing the vertices of individual triangles, geometry shaders producing new triangles, pixel shaders modifying the post-rasterization pixels, and compute shaders performing physics and other calculations. But the basic model of operation has stayed the same.

Rasterization has the advantage that it can be done fast; the optimizations that skip triangles that are hidden are effective, greatly reducing the work the GPU has to do, and rasterization also allows the GPU to stream through the triangles one at a time rather than having to hold them all in memory at the same time.

But rasterization has problems that limit its visual fidelity. For example, an object that lies outside the camera's field of view can't be seen, so it will be skipped by the GPU. However, that object could still cast a shadow within the scene. Or it might be visible from a reflective surface within the scene. Even within a scene, white light that's bounced off a bright red object will tend to color everything struck by that light in red; this effect isn't found in rasterized images. Some of these deficits can be patched up with techniques such as shadow mapping (which allows objects from outside the field of view to cast shadows within it), but the result is that rasterized images always end up looking different from the real world.

Fundamentally, rasterization doesn't work the way that human vision works. We don't emanate a grid of beams from our eyes and see which objects those beams intersect. Rather, light from the world is reflected into our eyes. It may bounce off multiple objects on the way, and as it passes through transparent objects, it can be bent in complex ways.

Enter raytracing

Raytracing is a technique for producing computer graphics that more closely mimics this physical process. From each light source within a scene, rays of light are projected, bouncing around until they strike the camera. Raytracing can produce substantially more accurate images; advanced raytracing engines can yield photorealistic imagery. This is why raytracing is used for rendering graphics in movies: computer images can be integrated with live-action footage without looking out of place or artificial.
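The core operation in any raytracer is asking where a ray hits an object. The sketch below shows that for a single sphere. Note one swapped-in assumption: for brevity it fires one ray per pixel outward from the camera, a common shortcut, rather than following light forward from the light sources as described above. The scene, the image size, and the function names are all hypothetical.

```python
import math

def hit_sphere(origin, direction, center, radius):
    """Distance along a normalized ray to the nearest hit, or None.

    Simplification: a ray that starts inside the sphere is reported as a miss.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(d * e for d, e in zip(direction, oc))
    c = sum(e * e for e in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray's line never touches the sphere
    t = -b - math.sqrt(disc)
    return t if t > 0 else None

def render(width=16, height=8):
    """Cast one ray per pixel and mark the pixels whose ray hits the sphere."""
    camera = (0.0, 0.0, 0.0)
    sphere_center, sphere_radius = (0.0, 0.0, 3.0), 1.0
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            # Map the pixel centre to a point on an image plane at z = 1.
            x = (i + 0.5) / width * 2 - 1
            y = 1 - (j + 0.5) / height * 2
            length = math.sqrt(x * x + y * y + 1)
            direction = (x / length, y / length, 1 / length)
            t = hit_sphere(camera, direction, sphere_center, sphere_radius)
            row += "#" if t is not None else "."
        rows.append(row)
    return rows

rows = render()
for row in rows:
    print(row)
```

A real renderer would go on to spawn further rays from each hit point for reflections, refraction, and shadows, which is where both the realism and the cost come from.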

But raytracing has a problem: it is enormously computationally intensive. Rasterization has been extensively optimized to try to restrict the amount of work that the GPU must do; in raytracing, all that effort is for naught, as potentially any object could contribute shadows or reflections to a scene. Raytracing has to simulate millions of beams of light, and some of that simulation may be wasted on rays that are reflected off-screen or hidden behind something else.
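A small sketch shows why nothing can safely be thrown away. Here a sphere sits behind the camera, so it could never appear on screen, yet it still blocks the light reaching a point in front of the camera. The scene layout and the `blocks_light` helper are assumptions made for this example; it checks whether the straight path from a surface point to the light passes through the sphere.

```python
def blocks_light(point, light, center, radius):
    """True if the sphere lies across the straight path from point to light.

    Assumes point and light are distinct positions.
    """
    to_light = [l - p for l, p in zip(light, point)]
    to_center = [c - p for c, p in zip(center, point)]
    length_sq = sum(v * v for v in to_light)
    # Parameter of the closest point on the segment, clamped to its two ends.
    t = sum(a * b for a, b in zip(to_center, to_light)) / length_sq
    t = max(0.0, min(1.0, t))
    closest = [p + t * v for p, v in zip(point, to_light)]
    dist_sq = sum((a - b) ** 2 for a, b in zip(closest, center))
    return dist_sq < radius * radius

# Camera at the origin looking down +z (an assumption of this sketch).
surface_point = (0.0, 0.0, 5.0)    # in front of the camera, on screen
light = (0.0, 0.0, -10.0)          # behind the camera
occluder = (0.0, 0.0, -5.0)        # also behind the camera, never on screen

print(blocks_light(surface_point, light, occluder, 1.0))           # in shadow
print(blocks_light(surface_point, light, (3.0, 0.0, -5.0), 1.0))   # moved aside
```

The first call returns `True` and the second `False`: an object the camera can't see decides whether a pixel the camera can see is lit.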

This isn't a problem for films; the companies making movie graphics will spend hours rendering individual frames, with vast server farms used to process each image in parallel. But it's a huge problem for games, where you only get about 16.7 milliseconds to draw each frame (for 60 frames per second) or even less for VR.
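The arithmetic behind that budget is simple enough to check. In the sketch below, the 90 Hz VR refresh rate, the 1920×1080 resolution, and the one-ray-per-pixel figure are assumptions picked for illustration, not numbers from Microsoft.

```python
def frame_budget_ms(fps):
    """Time available to draw one frame, in milliseconds."""
    return 1000.0 / fps

def primary_rays_per_second(width, height, fps, rays_per_pixel=1):
    """Rays needed just to cover every pixel once per frame, with no bounces."""
    return width * height * rays_per_pixel * fps

print(f"{frame_budget_ms(60):.1f} ms per frame at 60 fps")   # 16.7 ms
print(f"{frame_budget_ms(90):.1f} ms per frame at 90 fps")   # 11.1 ms
print(primary_rays_per_second(1920, 1080, 60))               # 124416000
```

Even a single ray per pixel at that assumed resolution means well over a hundred million rays every second, before any reflections or shadows are considered.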

However, modern GPUs are very fast. And while they're not yet fast enough to raytrace highly complex games at high refresh rates, they do have enough compute resources to handle some bits of raytracing. This is where DXR comes in. DXR is a raytracing API that extends the existing rasterization-based Direct3D 12 API. The 3D scene is arranged in a manner that's amenable to raytracing, and with the DXR API, developers can produce rays and trace their path through the scene. DXR also defines new shader types that allow programs to interact with the rays as they interact with objects in the scene.

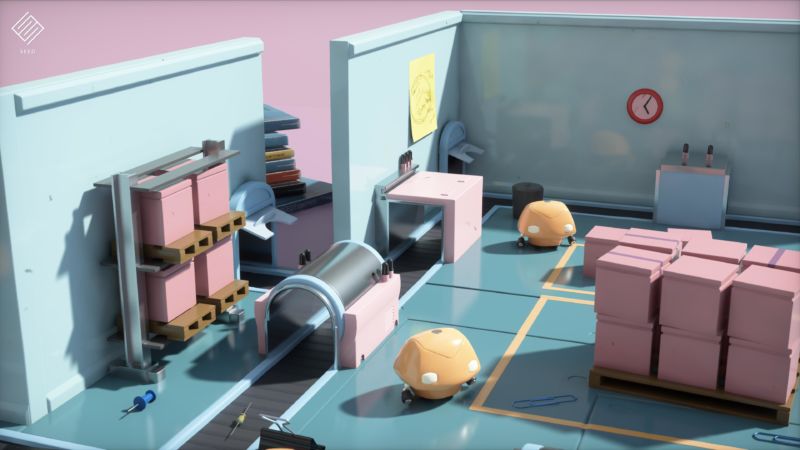

Because of the performance demands, Microsoft expects that DXR will be used, at least for the time being, to fill in some of the things that raytracing does very well and that rasterization doesn't: things like reflections and shadows. DXR should make these things look more realistic. We might also see simple, stylized games using raytracing exclusively.

The company says that it has been working on DXR for close to a year, and Nvidia in particular has plenty to say about the matter. Nvidia has its own raytracing engine designed for its Volta architecture (though currently, the only video card shipping with Volta is the Titan V, so the application of this is likely limited). When run on a Volta system, DXR applications will automatically use that engine.

Microsoft says vaguely that DXR will work with hardware that's currently on the market and that it will have a fallback layer that will let developers experiment with DXR on whatever hardware they have. Should DXR be widely adopted, we can imagine that future hardware may contain features tailored to the needs of raytracing. On the software side, Microsoft says that EA (with the Frostbite engine used in the Battlefield series), Epic (with the Unreal Engine), Unity Technologies (with the Unity engine), and others will have DXR support soon.

https://arstechnica.com/gadgets/2018/03/microsoft-announces-the-next-step-in-gaming-graphics-directx-raytracing/
